About Us

We aim to provide the most cost-effective and secure AI inference platform.

No restrictions

Your requests are never logged and never blocked. The LLM always receives exactly what you send it.

You are in full control of the model you are interacting with.

Money-back guarantee if you are not satisfied.

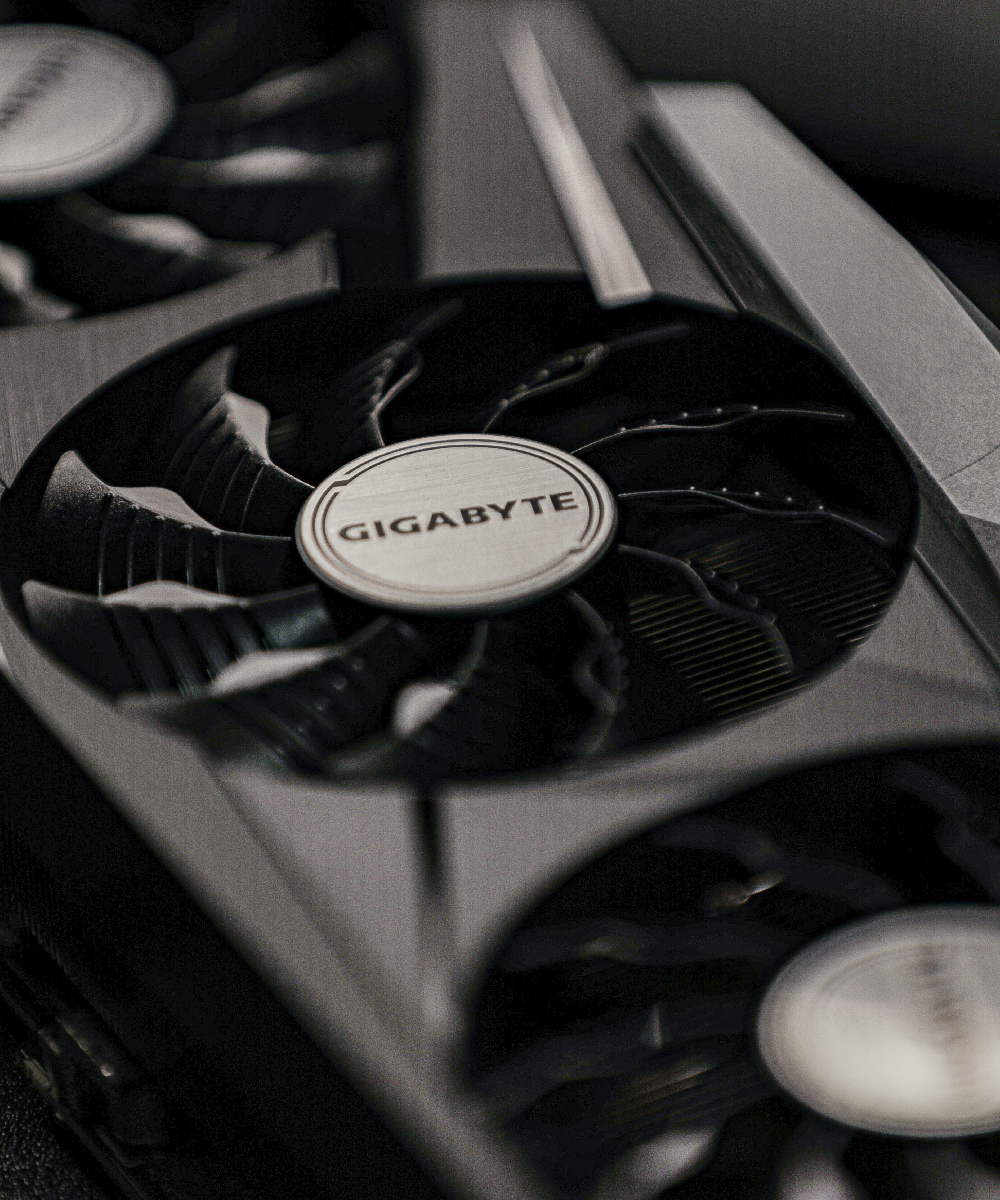

Custom hardware

We do not rent GPUs from the cloud. Instead, we build and run our own servers, optimized to deliver the most cost-effective inference while staying fast.

Before deployment, our servers are overclocked and then validated for stability and performance, drawing on our extensive experience with PC hardware.

Fine-tuned models

We host our own ArliAI-developed models, along with almost every fine-tuned model available on Hugging Face for any of the base models we offer.

We can do this because we host fine-tuned models using a high-rank LoRA loading method.

This lets us hot-swap models on the fly as needed. It also makes unique models possible, such as the Llama-3.05 models, which are Llama-3-based models extracted against Llama-3.1 models.
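To give a rough idea of what LoRA-based hot-swapping means in general, here is a minimal sketch in plain Python. It is an illustration of the generic technique, not our production code: the names `LoraAdapter` and `SharedBaseLayer`, the adapter names, and the tiny 2x2 weights are all made up for the example. The idea is that one copy of the base weights stays loaded, and each fine-tune is represented as a small pair of matrices added on top at request time.

```python
from typing import Dict, List, Optional

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply matrix m (rows x cols) by vector v (length cols)."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LoraAdapter:
    """Hypothetical container for a low-rank update delta_W = B @ A."""

    def __init__(self, a: Matrix, b: Matrix, scale: float = 1.0) -> None:
        self.a = a          # shape (rank, in_features)
        self.b = b          # shape (out_features, rank)
        self.scale = scale  # scaling applied to the low-rank update


class SharedBaseLayer:
    """One linear layer whose base weights are shared by every adapter."""

    def __init__(self, base_weight: Matrix) -> None:
        self.base_weight = base_weight
        self.adapters: Dict[str, LoraAdapter] = {}

    def register(self, name: str, adapter: LoraAdapter) -> None:
        # Adding a fine-tune only stores its small matrices;
        # the base weights are never copied or modified.
        self.adapters[name] = adapter

    def forward(self, x: Vector, adapter_name: Optional[str] = None) -> Vector:
        y = matvec(self.base_weight, x)
        if adapter_name is None:
            return y  # plain base model
        adapter = self.adapters[adapter_name]
        # Compute B @ (A @ x) without ever forming the full delta matrix.
        delta = matvec(adapter.b, matvec(adapter.a, x))
        return [yi + adapter.scale * di for yi, di in zip(y, delta)]


# Toy usage: an identity base layer and two rank-1 adapters (made-up values).
layer = SharedBaseLayer([[1.0, 0.0], [0.0, 1.0]])
layer.register("story", LoraAdapter(a=[[1.0, 1.0]], b=[[0.5], [0.0]]))
layer.register("chat", LoraAdapter(a=[[1.0, -1.0]], b=[[0.0], [2.0]]))

x = [2.0, 3.0]
base_out = layer.forward(x)
story_out = layer.forward(x, "story")
chat_out = layer.forward(x, "chat")
print(base_out, story_out, chat_out)
```

Switching between `"story"` and `"chat"` here is just a dictionary lookup per request, which is why this style of hosting can serve many fine-tunes of the same base model without reloading it.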

No data mining

We aim to provide a secure API endpoint by enforcing a strict zero-log policy.

Requests are sent from you to our servers fully encrypted, ensuring that only you can read your requests and the LLM's responses.

Contact Information

Contact us at contact@arliai.com or use our contact form if you have any questions or need support!